What is the Model Context Protocol (MCP), and how does it work with Large Language Models (LLMs)? Discover how unifying your APIs behind a single protocol can transform AI performance, scalability, and security.

Imagine a chef preparing a signature dish. Everything flows smoothly when all the ingredients are ready in one place. But if the chef has to visit different shops for each ingredient, each with different rules and payments, the process slows down, and the dish suffers.

Large Language Models (LLMs) face the same problem when they work with disconnected systems. They have the intelligence to deliver remarkable outcomes, but mismatched APIs, repeated logins, and inconsistent data limit their potential. The result is slower performance, higher costs, and missed opportunities.

By connecting the Model Context Protocol (MCP) with LLMs, we create a single, well-organized workspace where these models can reach every tool and dataset they need quickly and securely. Instead of juggling multiple systems, the LLM can complete tasks such as checking customer orders, verifying payments, and analyzing trends in one smooth flow.

“The key to unlocking AI's potential lies in seamlessly connecting intelligence with information.”

The Model Context Protocol is designed so that your LLM-powered systems can access that information securely and at scale, without a custom integration for every source.

What is MCP and How Does it Work to Empower LLMs

The Model Context Protocol (MCP) is a universal communication standard that lets LLMs interact with external tools and systems in a consistent, predictable, and secure way. This means no more building a separate integration for each tool or rewriting code every time a system changes.

Here’s what MCP brings to the table:

- Consistent data exchange through standard formats

- Centralized security enforcement with uniform authentication and authorization

- Standardized result formatting to maintain uniformity across outputs

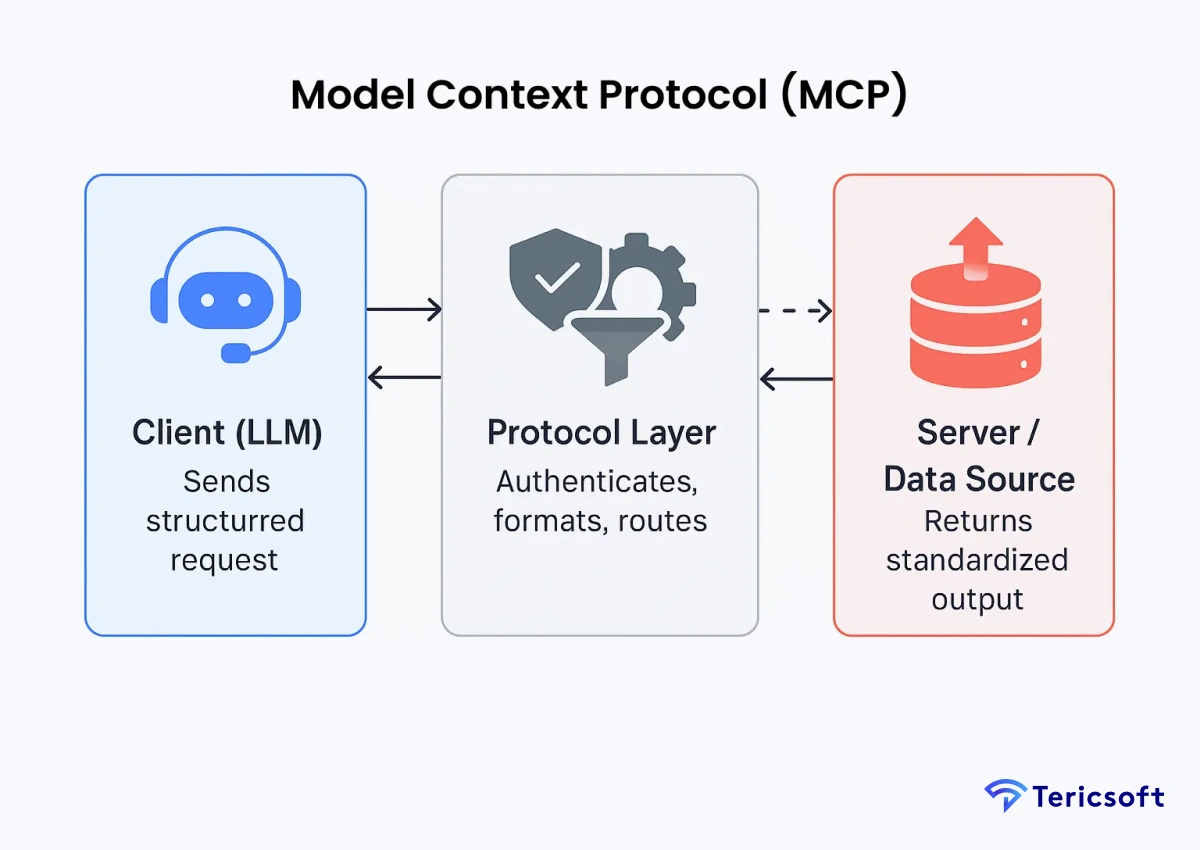

MCP functions through three interconnected components:

- Client (the LLM application) prepares and sends a structured request with clear parameters

- Protocol Layer authenticates, formats, and routes the request

- Server (Tool/Data Source) processes the request and returns a standardized, validated response

By implementing this structure, MCP transforms integration chaos into streamlined interoperability.
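
The three-step flow above can be sketched in a few lines of Python. This is a minimal illustration, not an MCP implementation: the message shape is loosely modeled on the JSON-RPC 2.0 `tools/call` messages that MCP uses, while the tool name `get_order_status`, the token check, and the error codes are assumptions made up for the example.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry (the "server" side): tool name -> handler.
TOOLS: dict[str, Callable[[dict[str, Any]], Any]] = {
    "get_order_status": lambda args: {"order_id": args["order_id"], "status": "shipped"},
}

# Assumption: a trivial stand-in for real authentication.
VALID_TOKENS = {"demo-token"}


def build_request(request_id: int, tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Client side: build a structured request in JSON-RPC 2.0 shape."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


def protocol_layer(request: dict[str, Any], token: str) -> dict[str, Any]:
    """Authenticate, route to the tool, and wrap the result in a uniform envelope."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if token not in VALID_TOKENS:
        # Illustrative error code from the implementation-defined JSON-RPC range.
        return {**base, "error": {"code": -32001, "message": "unauthorized"}}
    handler = TOOLS.get(request["params"]["name"])
    if handler is None:
        return {**base, "error": {"code": -32601, "message": "unknown tool"}}
    result = handler(request["params"]["arguments"])
    # Every tool result comes back in the same shape, whatever the tool returned.
    return {**base, "result": {"content": [{"type": "text", "text": json.dumps(result)}]}}


if __name__ == "__main__":
    request = build_request(1, "get_order_status", {"order_id": "A100"})
    print(json.dumps(protocol_layer(request, token="demo-token"), indent=2))
```

Because every response shares one envelope, the caller needs a single parsing path no matter how many tools sit behind the protocol layer.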

MCP vs API vs RAG: Real World Performance and Scalability Insights

Comparing Model Context Protocol to traditional APIs and Retrieval-Augmented Generation (RAG) reveals significant differences in efficiency, security, and scalability.

“AI is the most powerful technology force of our time, and connecting it effectively will define its impact.”

— Jensen Huang, CEO of NVIDIA

MCP makes sure your AI connects right.

Benefits of MCP for LLM Technical Leaders

For technology leaders, the Model Context Protocol for LLMs brings:

- Reduced Integration Complexity by replacing multiple custom connectors with one protocol layer

- Simplified Maintenance with all API and tool changes handled within the MCP layer

- Consistent Security Model ensuring unified authentication and permission handling

- Improved Performance through parallel data retrieval from multiple sources

- Scalable Architecture enabling new tool integrations without re-architecting

Together, these benefits help organizations reach the market faster, reduce engineering overhead, and maintain security without slowing innovation.

When to Use MCP with LLMs for Maximum Impact

Imagine you’re the product manager for a global travel concierge app powered by an advanced LLM. A customer asks, "Plan me a weekend getaway in Italy with flights, hotels, and local experiences. Stay within my budget and match my dietary needs."

Without MCP, your LLM scrambles to pull data from separate flight booking APIs, hotel systems, restaurant databases, and weather services, each with different formats, login requirements, and processing speeds. The result? Slow, error-prone answers that frustrate the user.

With MCP, your LLM sends one structured request. The protocol securely fetches live flight prices, available hotels, nearby restaurants with vegan menus, and weather updates, processes them in parallel, and delivers a cohesive plan in seconds. The user gets a seamless experience, and you save weeks of integration effort.

This is where MCP shines: when your LLM needs to orchestrate multiple moving parts into one smooth, reliable interaction.
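
The parallel retrieval in the travel example can be sketched with Python's `asyncio`. This is a hedged illustration only: `call_tool` is a hypothetical stand-in that sleeps instead of contacting a real service, and the tool names (`search_flights`, `search_hotels`, and so on) are invented for the example.

```python
import asyncio
from typing import Any


async def call_tool(name: str, arguments: dict[str, Any], delay: float) -> dict[str, Any]:
    """Hypothetical tool call; the sleep simulates network latency."""
    await asyncio.sleep(delay)
    return {"tool": name, "arguments": arguments, "ok": True}


async def plan_trip(destination: str, budget: int) -> dict[str, Any]:
    args = {"destination": destination, "budget": budget}
    # Start all four lookups at once; total wait is roughly the slowest call,
    # not the sum of all four.
    results = await asyncio.gather(
        call_tool("search_flights", args, 0.03),
        call_tool("search_hotels", args, 0.02),
        call_tool("search_restaurants", {**args, "diet": "vegan"}, 0.01),
        call_tool("get_weather", {"destination": destination}, 0.01),
    )
    # Index the results by tool name so the caller can assemble one plan.
    return {result["tool"]: result for result in results}


if __name__ == "__main__":
    plan = asyncio.run(plan_trip("Italy", 1500))
    print(sorted(plan))
```

`asyncio.gather` returns results in the order the calls were passed in, so the plan can be assembled deterministically even though the lookups finish at different times.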

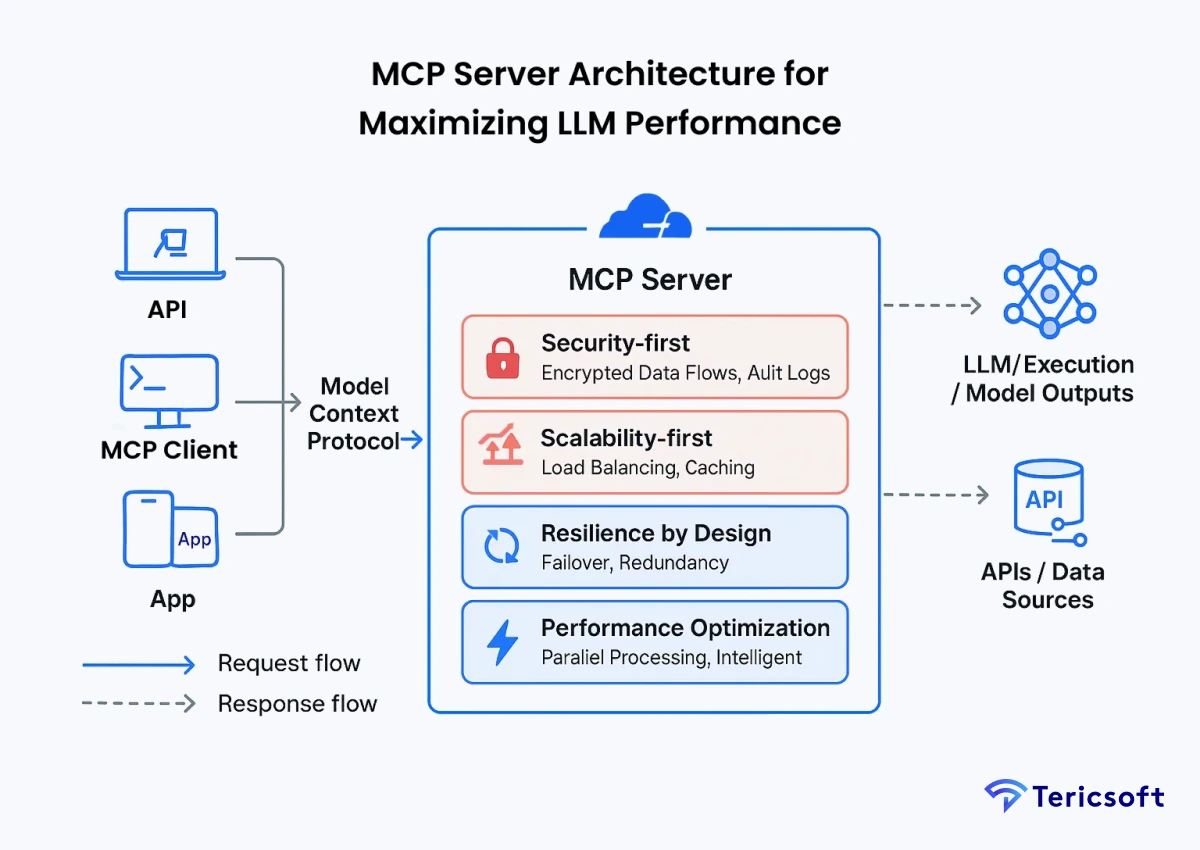

MCP Server Architecture Built for Maximizing LLM Performance

The MCP server architecture processes each request through a structured flow: from Client to Protocol Layer to Server to Tool or Data Source. This layered approach ensures consistency, reliability, and adaptability. It is built on four principles:

- Security-first principles such as encrypted data flows, scoped permissions, and detailed audit logs

- Scalability-first engineering including horizontal scaling, intelligent caching, and load balancing to handle fluctuating workloads

- Resilience by Design with failover mechanisms and redundancy to maintain uptime during system failures

- Performance Optimization through parallel processing, data compression, and intelligent request routing
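
Two of the ideas in this list, caching and failover, can be sketched together. The code below is an assumption-laden illustration rather than a description of any particular MCP server: `TTLCache`, `call_with_failover`, and the backend callables are hypothetical names, and a real deployment would likely use a shared cache and health checks instead of in-process state.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float) -> None:
        self._ttl = ttl_seconds
        self._entries: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._entries[key]  # drop the stale entry
            return None
        return value

    def put(self, key: str, value: Any) -> None:
        self._entries[key] = (time.monotonic() + self._ttl, value)


def call_with_failover(
    key: str, backends: list[Callable[[str], Any]], cache: TTLCache
) -> Any:
    """Serve from cache if possible; otherwise try each backend in order."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    last_error: Optional[Exception] = None
    for backend in backends:
        try:
            value = backend(key)
        except ConnectionError as exc:
            last_error = exc  # this backend is down; fall through to the next
            continue
        cache.put(key, value)
        return value
    raise RuntimeError("all backends failed") from last_error
```

With a primary that raises `ConnectionError` and a healthy replica, the first call falls through to the replica and the second is answered from the cache without touching either backend.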

Security Challenges in MCP and LLM Workflows

Even with its strengths, the Model Context Protocol, used alongside LLMs, faces potential threats that must be proactively managed. Here’s how we address them:

- Prompt Injection mitigated through request validation and sanitization

- Privilege Escalation prevented by enforcing least-privilege principles and RBAC

- Data Leakage countered with multi-layer authentication and continuous anomaly detection

- Man-in-the-Middle Attacks avoided via TLS encryption and certificate pinning
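
Request validation and least-privilege checks from the list above might look like the following sketch. The roles, tool names, and argument schemas are hypothetical, and the check covers only structure and permissions. It does not by itself stop prompt injection, which also requires care with how tool output is fed back to the model.

```python
from typing import Any

# Hypothetical RBAC table: each role may call only the tools it needs.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"get_order_status"}),
    "finance": frozenset({"get_order_status", "verify_payment"}),
}

# Hypothetical per-tool schema: argument name -> expected type.
TOOL_SCHEMAS: dict[str, dict[str, type]] = {
    "get_order_status": {"order_id": str},
    "verify_payment": {"payment_id": str, "amount_cents": int},
}


def authorize(role: str, tool: str) -> None:
    """Reject the call unless the role is explicitly granted the tool."""
    if tool not in ROLE_PERMISSIONS.get(role, frozenset()):
        raise PermissionError(f"role {role!r} may not call {tool!r}")


def validate_arguments(tool: str, arguments: dict[str, Any]) -> None:
    """Reject unknown tools, missing or extra arguments, and wrong types."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        raise ValueError(f"unknown tool {tool!r}")
    if set(arguments) != set(schema):
        raise ValueError(f"expected arguments {sorted(schema)}, got {sorted(arguments)}")
    for name, expected in schema.items():
        value = arguments[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"argument {name!r} must be {expected.__name__}")


def guard(role: str, tool: str, arguments: dict[str, Any]) -> None:
    """Run both checks before a request is forwarded to a tool."""
    authorize(role, tool)
    validate_arguments(tool, arguments)
```

Denying by default (an unknown role gets an empty permission set) keeps the failure mode safe: a misconfigured role can call nothing rather than everything.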

Real World Applications of MCP: Transforming Industries

Across industries, MCP-powered LLMs drive transformation:

- FinTech enhancing fraud detection by combining transactional, geolocation, and compliance data in real time

- HealthTech enabling HIPAA-compliant AI diagnostics that integrate EMR data, lab reports, and imaging results

- E-commerce delivering personalized recommendations by merging live inventory updates with behavioral analytics

- SaaS producing unified reports by orchestrating CRM, ERP, and analytics data sources

- EdTech enabling adaptive learning by integrating content delivery platforms, assessment tools, and analytics engines

Conclusion: Why MCP is Essential for LLM Success

In modern AI ecosystems, integration delays are avoidable. The Model Context Protocol creates a unified, secure, and scalable bridge between LLMs and the tools they need.