What is an Enterprise LLM? How private LLM systems combine retrieval, fine tuning, and agentic AI to create governed, domain-aware intelligence for high-stakes workflows in finance, healthcare, and SaaS.

In boardrooms across the UAE, USA, UK, and India, a pattern is beginning to repeat. Executives know AI is transformative. They approve budgets, join AI councils, and ask their teams for monthly updates. Yet when they look closely at their actual operations, their cycle times and customer experience metrics still look strangely familiar.

It is not for lack of investment. Around 88% of enterprises now invest in AI. Recent studies show enterprise AI adoption has surged, with 78% of organizations now using AI in at least one function, up from 55% the prior year. Crucially, companies report an average 3.7x return on investment for every dollar spent on generative AI. The global AI market is forecast to cross one trillion dollars within the next decade. Generative AI alone is projected to contribute trillions in economic value and uplift global productivity by levels rarely seen in modern history.

We are at a moment that echoes an older turning point. When electricity first arrived, people imagined brighter lights and mechanical tools. They did not imagine microprocessors, satellites, MRI scanners, or the global internet. Even the inventors could not foresee the full arc.

"AI is one of the most important things humanity is working on. It is more profound than fire or electricity."

— Sundar Pichai, CEO of Google

Enterprise leaders sense the same scale of possibility. Yet many still ask the foundational question that determines whether AI becomes a headline or a capability.

"How do we build an AI brain that understands our customers, our workflows, and our risk appetite, without surrendering control of our data or our future?"

This is the central idea behind the Enterprise LLM.

The Evidence Is Here: Enterprises Are Already Using AI At Depth

The world's leading companies have quietly moved beyond proofs of concept. They are operationalizing AI in revenue driving, brand defining, and risk sensitive workflows.

A few examples illustrate the shift:

- Large banks such as JPMorgan use AI for financial analysis and anomaly detection.

- Retail giants like Walmart embed AI into customer support, returns triage, and supply chain prediction.

- Industrial leaders such as Siemens use AI for predictive maintenance and lifecycle optimization.

- Automotive pioneers like Mercedes-Benz use AI to personalize driving experiences.

- Product led companies like Reco and Perfect Corp embed AI in SaaS security and AR try ons.

These are not marketing pilots. They are real, regulated, operational systems.

Meanwhile, many other enterprises are still stuck in a different cycle: Small experiments with public LLMs. A chatbot that cannot access internal data. A proof of concept that struggles to pass an audit. A few demos in an innovation lab followed by quarter after quarter of stalled adoption.

The difference between these two groups is not access to models.

It is the decision to build an Enterprise LLM rather than relying only on public LLM APIs.

What Is an Enterprise LLM and Why It Matters Now

An Enterprise LLM is a large language model deployed in a private, fully controlled environment and deeply connected to an organization's systems, workflows, and governance policies. It usually begins with a foundational model such as GPT, Llama, Claude, Mistral, Cohere, Qwen, DeepSeek, or BERT. From there, it is adapted with contextual retrieval, fine tuning, agentic reasoning, prompt governance, and human in the loop checks.

Many people casually call this a private LLM, but the term Enterprise LLM is far more specific. It captures the idea of an intelligence layer that is customized, governed, domain aware, and architected for compliance driven environments.

Electricity once became invisible infrastructure. Enterprise LLMs follow the same trajectory. They become the intelligence grid beneath your workflows.

Two Scenarios That Illustrate What Enterprise LLMs Actually Do

Before we explore the architecture and implementation journey, it helps to ground the idea of an Enterprise LLM in real, lived workflows. Enterprises do not experience AI as a model or a dataset. They experience it as moments in a customer journey, decisions made faster, escalations resolved earlier, and teams supported with context they never had before. The following scenarios illustrate how an Enterprise LLM behaves inside an organization, how it collaborates with humans, and how it transforms workflows that were once bottlenecked by manual effort and fragmented information.

Scenario 1: A private AI brain for every customer

Imagine a FinTech institution that serves millions of customers across multiple geographies. Every customer journey leaves behind a complex trail:

- Support tickets in one system

- Transaction histories in another

- Relationship manager notes in a CRM

- Risk scores from internal engines

- Contracts, KYC documents, and regulatory clauses scattered across systems

When a customer reaches out, analysts usually scramble across dashboards, documents, and emails. A single escalation can take 10 to 20 days. Follow up actions often take 48 hours or more.

With an Enterprise LLM:

- A retrieval layer indexes every relevant document, policy, ticket, and customer trail.

- The Enterprise LLM, deployed inside the institution's private cloud or VPC, retrieves the exact context required.

- It generates a unified customer summary, suggests the next steps, drafts outreach emails, and can even trigger tasks in internal tools.

Suddenly, a 10 to 20 day process compresses to roughly five days. A 48 hour follow up becomes a matter of minutes.

The institution has not automated recklessly. It has built an intelligence layer that sees more, reasons better, and acts faster.

Scenario 2: Human aligned autonomy instead of black box automation

Consider a healthtech or insurance claims company that wants to automate case summarization, prior auth analysis, or document validation. Full automation is not an option, because these actions are compliance critical.

An Enterprise LLM creates a safe middle ground:

- It drafts the first version of the analysis.

- It highlights which guidelines, clauses, or historical cases informed the answer.

- It routes sensitive cases to human experts.

- It incorporates corrections into future responses through human in the loop signals.

Over time, the Enterprise LLM earns the right to handle low risk tasks autonomously, while experts handle edge cases. This is not black box automation. It is a designed, governed maturity path.
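One way to picture this maturity path is a small gate that tracks how often reviewers accept the model's drafts unchanged, per task type. The sketch below is illustrative only: the class name, the thresholds, and the "low" risk label are all assumptions, not part of any particular product.

```python
from collections import defaultdict


class AutonomyTracker:
    """Tracks reviewer acceptance per task type (hypothetical helper)."""

    def __init__(self, min_reviews=50, min_approval_rate=0.98):
        # Assumed thresholds; a real programme would set these with risk teams.
        self.min_reviews = max(min_reviews, 1)
        self.min_approval_rate = min_approval_rate
        self.stats = defaultdict(lambda: {"reviewed": 0, "approved": 0})

    def record_review(self, task_type, approved_unchanged):
        """Log one human review of a model draft."""
        entry = self.stats[task_type]
        entry["reviewed"] += 1
        if approved_unchanged:
            entry["approved"] += 1

    def route(self, task_type, risk):
        """Return 'auto' only for low risk tasks with a strong track record."""
        if risk != "low":
            return "human"
        entry = self.stats[task_type]
        if entry["reviewed"] < self.min_reviews:
            return "human"
        rate = entry["approved"] / entry["reviewed"]
        return "auto" if rate >= self.min_approval_rate else "human"
```

Anything that is not low risk always goes to a person, and a task type loses its autonomy as soon as its approval rate drops below the threshold.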

From Foundational Models to Enterprise LLMs

Foundational models are engines.

Enterprise LLMs are engineered intelligence systems built around those engines.

Choosing the right foundational model depends on:

- Licensing and IP posture

- Deployment options, including on prem

- Latency and throughput

- Cost profile under real usage

- Multilingual reasoning ability

- Residency and compliance constraints

The foundational model matters, but the Enterprise LLM architecture that surrounds it determines whether the system is adoptable in real workflows.

RAG vs Agentic AI: When Each Method Wins

In Tericsoft's internal R&D across FinTech, HealthTech, and SaaS projects, a pattern became clear.

RAG is excellent, but not always sufficient.

Agentic AI is powerful, but not always stable.

Both have strengths and weaknesses.

RAG: Retrieval Augmented Generation

RAG is ideal when:

- You need grounded, cited answers

- Policies, contracts, and clauses drive decisions

- The workflow is fairly structured

- Compliance requires traceability

- You want to update context without retraining

RAG is predictable because it follows a fixed retrieve-then-generate pipeline.

It retrieves the right information and limits hallucinations.
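A minimal sketch of that retrieve-then-generate pattern follows. It uses plain keyword overlap as a stand-in for a real vector search, and the document ids and texts are invented for illustration. The model call itself is left out: the function only builds the grounded prompt.

```python
import re

# Hypothetical policy snippets standing in for an enterprise corpus.
DOCS = {
    "policy-7.2": "Refunds above 500 USD require approval from a senior analyst.",
    "policy-3.1": "Customer identity must be verified before any account change.",
    "faq-12": "Standard refunds are processed within five business days.",
}


def tokenize(text):
    """Lowercase and split into a set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, top_k=2):
    """Rank documents by the number of terms shared with the question."""
    query_terms = tokenize(question)
    scored = []
    for doc_id, text in docs.items():
        overlap = len(query_terms & tokenize(text))
        if overlap:
            scored.append((overlap, doc_id))
    # Highest overlap first; ties broken by id so results are deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def build_prompt(question, docs, top_k=2):
    """Assemble a prompt that restricts the model to cited sources."""
    ids = retrieve(question, docs, top_k)
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ids)
    prompt = (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
    return prompt, ids
```

Because the source ids travel with the prompt, the answer can be traced back to specific clauses, and updating `DOCS` changes what the model sees without any retraining.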

Agentic AI

Agentic AI involves reasoning chains, planning steps, and autonomous decision trees. It is ideal when:

- The workflow is dynamic

- The sequence of steps changes based on context

- The model needs to explore, observe, and adapt

- Multi step tool interactions are required

In our internal experiments, we found agentic approaches outperform RAG in workflows like:

- Complex financial reasoning

- Multi document cross referencing

- Real time decision routes where context changes mid execution
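The core of an agentic workflow is a loop that picks the next step from what it has observed so far. The sketch below shows that loop with a rule-based `choose_next_action` standing in for the model's planning step. The tool names, the `limit` field, and the ledger format are assumptions made for the example.

```python
def fetch_transactions(state):
    """Tool: load the ledger into the working state."""
    return {"transactions": state["ledger"]}


def review_large_transfers(state):
    """Tool: collect transfers above the configured limit."""
    flagged = [t for t in state["transactions"] if t["amount"] > state["limit"]]
    return {"flagged": flagged, "large_transfers_reviewed": True}


TOOLS = {
    "fetch_transactions": fetch_transactions,
    "review_large_transfers": review_large_transfers,
}


def choose_next_action(state):
    """Stand-in for the model: decide the next step from current observations."""
    if "transactions" not in state:
        return "fetch_transactions"
    has_large = any(t["amount"] > state["limit"] for t in state["transactions"])
    if has_large and not state.get("large_transfers_reviewed"):
        return "review_large_transfers"
    return "finish"


def run_agent(initial_state, max_steps=5):
    """Plan, act, observe, repeat, with a hard cap on the number of steps."""
    state = dict(initial_state)
    trace = []
    for _ in range(max_steps):
        action = choose_next_action(state)
        trace.append(action)
        if action == "finish":
            break
        state.update(TOOLS[action](state))
    return state, trace
```

The sequence of steps is not fixed in advance: a ledger with no large transfers finishes after one tool call, while one with a large transfer takes an extra review step. The `max_steps` cap is one simple guard against the instability noted above.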

The Hybrid Reality

Most mature Enterprise LLMs use a hybrid model:

- RAG for grounding and compliance

- Agentic reasoning for adapting and sequencing actions

- Governance for safety and risk

- HITL for sensitive outcomes

This combination is often the difference between a useful AI and a production ready Enterprise LLM.

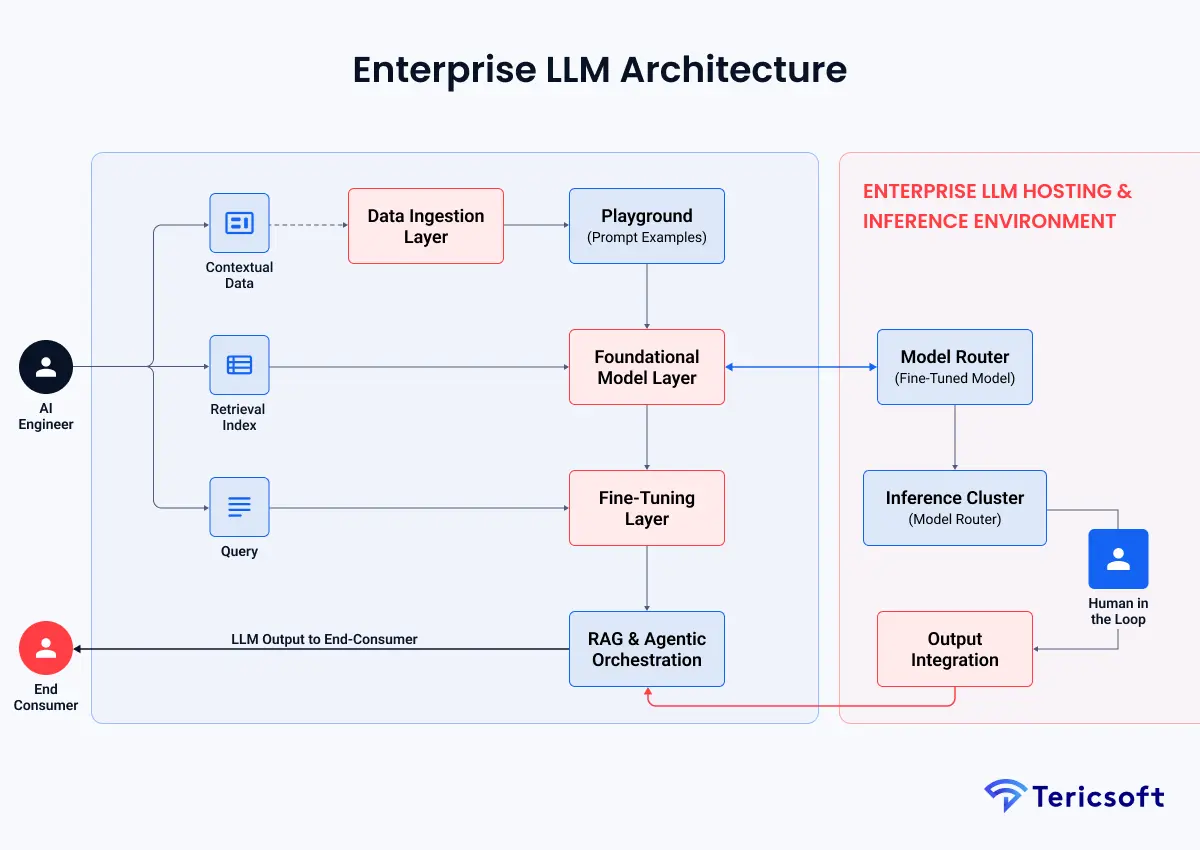

Architecture of an Enterprise LLM: A FinTech Example

To understand how an Enterprise LLM actually works, let us walk through a FinTech scenario:

Automated KYC review and underwriting assistance.

Below is a simplified high level architecture that reflects the systems Tericsoft commonly designs.

1. Data Ingestion Layer

The enterprise collects:

- KYC documents

- Transaction histories

- Risk scores

- CRM notes

- Regulatory texts

- Product policies

- Prior cases and outcomes

These flow into a secure ingestion pipeline where documents are normalized, encrypted, and versioned.
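As a rough illustration of the normalize and version steps, the sketch below canonicalizes text and keeps a new version only when the content hash changes. The `VersionedStore` class is hypothetical. Encryption is deliberately omitted here, since in practice it would come from a vetted library or a key management service rather than hand-written code.

```python
import hashlib
import unicodedata


def normalize(text):
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.split())


class VersionedStore:
    """In-memory stand-in for a versioned document store (hypothetical)."""

    def __init__(self):
        self.versions = {}  # doc_id -> list of (sha256 hex digest, text)

    def ingest(self, doc_id, raw_text):
        """Store a new version if the content changed; return the version number."""
        text = normalize(raw_text)
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        history = self.versions.setdefault(doc_id, [])
        if history and history[-1][0] == digest:
            return len(history)  # unchanged content, no new version
        history.append((digest, text))
        return len(history)
```

Hashing the normalized text means cosmetic differences, such as extra spaces from a scanner, do not create spurious versions.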

2. Governance and Access Control

Before anything touches the model:

- PII detection runs

- Sensitive fields are masked based on role

- Residency rules determine where data is stored

- Access policies are mapped against IAM
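A simplified sketch of PII detection with role-based masking is shown below. The two regular expressions are deliberately crude and the role names are invented. A production system would rely on a dedicated PII detection service and on the organization's actual IAM roles.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Hypothetical mapping from role to the PII kinds that role may see.
ROLE_VISIBLE = {
    "compliance_officer": {"email", "card"},
    "support_agent": {"email"},
    "model": set(),
}


def detect_pii(text):
    """Return the sorted PII kinds found in the text."""
    return sorted(kind for kind, pattern in PII_PATTERNS.items() if pattern.search(text))


def mask_for_role(text, role):
    """Mask every PII kind the role may not see; unknown roles see nothing."""
    visible = ROLE_VISIBLE.get(role, set())
    for kind, pattern in PII_PATTERNS.items():
        if kind not in visible:
            text = pattern.sub(f"[{kind.upper()}]", text)
    return text
```

Note the default-deny choice: a role missing from the mapping gets the fully masked text, which is the same view the model receives.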

3. Retrieval Index

A multi store retrieval layer is built:

- Vector indexes for semantic memory

- Keyword indexes for legal clauses

- Structured stores for transactions

- Graph indexes for relationships

This retrieval layer becomes the enterprise's collective memory.
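When several stores each return their own ranked list, the results have to be merged somehow. One common option, used here purely as an example and not something this architecture prescribes, is reciprocal rank fusion. The document ids below are made up.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked id lists; each appearance adds 1 / (k + rank) to the score."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from three different stores for the same query.
vector_hits = ["case-88", "clause-4", "memo-2"]
keyword_hits = ["clause-4", "reg-17"]
graph_hits = ["clause-4", "case-88"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits, graph_hits])
```

Fusion by rank avoids comparing raw scores across stores, which usually live on incompatible scales. Here `clause-4` comes out on top because all three stores returned it.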

4. Foundational Model Layer

A chosen foundational model, such as Claude, GPT, or Mistral, sits at the center. This model is deployed inside the enterprise VPC.

5. Fine Tuning Layer

Fine tuning datasets are created from:

- Past underwriting memos

- Ideal KYC summaries

- Analyst corrections

- Escalation notes

- Policy interpretations

The foundational model becomes an Enterprise LLM aligned to the institution's voice, risk, and workflows.
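To make the dataset step concrete, the sketch below turns logged analyst reviews into prompt and completion pairs in JSON Lines form. The record fields (`case_text`, `model_draft`, `analyst_correction`, `approved`) and the output schema are assumptions. The exact format depends on the fine tuning toolchain in use.

```python
import json


def build_examples(reviews):
    """Keep corrected drafts and drafts approved unchanged; skip everything else."""
    examples = []
    for review in reviews:
        target = review.get("analyst_correction")
        if not target and review.get("approved"):
            target = review["model_draft"]
        if not target:
            continue  # rejected without a correction: nothing to learn from
        examples.append({"prompt": review["case_text"], "completion": target})
    return examples


def to_jsonl(examples):
    """Serialize one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)
```

Preferring the analyst's correction over the model's own draft is what pulls the model toward the institution's voice rather than reinforcing its existing habits.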

6. RAG and Agentic Orchestrator

This is where intelligence forms.

- RAG retrieves the correct KYC document sections, prior cases, and risk rule clauses.

- The agentic layer sequences the workflow:

  - Validate identity

  - Extract risk signals

  - Compare against guidelines

  - Draft a summary

  - Route to HITL if needed
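The five workflow steps can be sketched as plain functions chained by a small orchestrator. Everything specific here is a placeholder: the case fields, the `XX` country code, the deposit threshold, and the rule for what counts as a guideline breach. In a real system the extraction and drafting steps would call the model.

```python
HIGH_RISK_COUNTRIES = {"XX"}  # placeholder country code
LARGE_DEPOSIT = 10_000  # assumed threshold
GUIDELINE_BLOCKERS = {"high_risk_country"}  # hypothetical policy rule


def validate_identity(case):
    """Step 1: compare the name on the ID with the name on the application."""
    return case["id_name"].strip().lower() == case["application_name"].strip().lower()


def extract_risk_signals(case):
    """Step 2: derive simple risk signals from the case fields."""
    signals = []
    if case["country"] in HIGH_RISK_COUNTRIES:
        signals.append("high_risk_country")
    if case["largest_deposit"] > LARGE_DEPOSIT:
        signals.append("large_deposit")
    return signals


def compare_against_guidelines(signals):
    """Step 3: return the signals that breach a guideline."""
    return [signal for signal in signals if signal in GUIDELINE_BLOCKERS]


def draft_summary(identity_ok, signals):
    """Step 4: produce the first draft of the review summary."""
    identity = "verified" if identity_ok else "mismatch"
    return f"Identity {identity}; risk signals: {', '.join(signals) or 'none'}."


def review_case(case):
    """Run all steps, then step 5: route to a human if anything looks off."""
    identity_ok = validate_identity(case)
    signals = extract_risk_signals(case)
    breaches = compare_against_guidelines(signals)
    summary = draft_summary(identity_ok, signals)
    route = "hitl" if (not identity_ok or breaches) else "straight_through"
    return {"summary": summary, "breaches": breaches, "route": route}
```

Keeping each step as its own function makes the sequence auditable, since every intermediate result can be logged next to the final routing decision.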

7. Human in the Loop (HITL)

Analysts see the draft, correct it, approve it, or escalate it.

Their corrections are logged and fed back as:

- Retrieval signals

- Prompt improvements

- Fine tuning samples

8. Output Integrations

Once approved, outputs flow to:

- CRM

- Ticketing systems

- Underwriting engines

- Customer communication channels

This is the operational loop of an Enterprise LLM. Over time, the system becomes a continuously learning intelligence layer.

Contextual Retrieval: Memory for the Enterprise LLM

Contextual retrieval is the nearest thing to giving an AI institutional memory. Enterprises store enormous context across:

- SOPs

- Legal contracts

- Customer documents

- CRM trails

- Logs

- Emails

- Past cases

- Internal wikis

RAG is what allows an Enterprise LLM to draw from these sources at the exact moment it needs them. This is why regulated industries rely so heavily on retrieval. It provides the grounding and auditability they require.

Fine Tuning: From General Reasoning To Domain Mastery

Fine tuning shapes the behavior of the Enterprise LLM.

It teaches the model how your analysts speak, how they write memos, how they interpret rules, and how they escalate decisions.

If retrieval is memory, fine tuning is personality and judgment.

Human In The Loop: The Engine Of Trust

No enterprise AI should run without supervised guardrails.

Human in the loop ensures:

- Sensitive decisions never go unsupervised

- The Enterprise LLM earns trust step by step

- Quality improves over time

- Every correction becomes training data

HITL is not a constraint.

It is the governance system that makes Enterprise LLM adoption possible.

Implementation Roadmap For Enterprise LLM Deployment

A practical journey for CTOs and AI leaders:

- Data ingestion and governance

- Infrastructure choice

- Contextual retrieval and indexing

- Fine tuning and prompt governance

- HITL and evaluation

- Continuous learning and observability

This roadmap takes you from prototypes to production systems.

Enterprise LLM vs Public LLM API

Experiment with public LLMs.

Operationalize with Enterprise LLMs.

Use Case Blueprints Across Industries

FinTech

Enterprise LLMs act as intelligent copilots embedded directly into regulated financial workflows.

They can review KYC documents, extract key fields, validate identity information, and detect inconsistencies that analysts typically catch only after manual review. For underwriting teams, the model can read through long financial statements, risk reports, and transaction histories to draft structured underwriting summaries in minutes. In AML workflows, the Enterprise LLM can generate initial suspicious activity narratives, correlate entities, and reference specific regulations or past cases that match the current pattern.

Regulatory teams use the Enterprise LLM to perform real time regulatory lookup, pulling precise clauses from RBI, FCA, SEC, DFSA, and region specific guidelines with citations. Customer facing teams benefit from deeper customer intelligence, where the LLM synthesizes lifetime behavior, spending patterns, product usage, and past service interactions into a unified brief.

The result is a faster, more compliant financial operation where analysts spend time making decisions, not preparing context.

Healthcare

Enterprise LLMs help clinicians and operations teams reduce administrative load without compromising accuracy or compliance.

When reviewing clinical cases, the LLM can analyze doctor notes, lab results, imaging reports, and guideline documents to create first pass summaries that adhere to clinical language and standards. For guideline lookup, the Enterprise LLM retrieves the exact section from clinical protocols, cites it inline, and explains why the guideline applies. This builds trust in environments where traceability is mandatory.

In prior authorization workflows, the Enterprise LLM assembles all required evidence from EHR notes, past documentation, and patient history, which reduces back-and-forth cycles between payers and providers. For medical documentation, the model assists doctors by generating structured drafts for discharge summaries, operative notes, or clinical histories based on dictated or typed notes.

Healthcare teams experience shorter turnaround times, cleaner documentation, and more consistent adherence to clinical guidelines.

Retail

Retailers operate in high velocity environments where product knowledge, customer intent, and operational decisions shift daily. Enterprise LLMs enhance retail workflows by acting as always updated knowledge engines.

Product Q&A copilots help both customers and internal support teams by answering questions about size, compatibility, materials, warranty, and delivery timelines by referencing product catalogs, past queries, and inventory data. Merchandising teams get real time insights into product performance, content gaps, customer reviews, and competitor trends synthesized into crisp, actionable briefs.

For returns triage, the Enterprise LLM reads customer messages, return reasons, order histories, and policy documents to classify cases, detect fraudulent patterns, and recommend the correct resolution path. It can also generate journey summaries for customer service teams, showing what the customer has bought, returned, rated, browsed, or asked about across the lifespan of their relationship.

This leads to faster resolutions, reduced return abuse, and higher customer satisfaction.

SaaS

SaaS companies deal with rapidly evolving codebases, documentation, and customer tickets.

Enterprise LLMs become internal copilots that bridge engineering, product, and support.

Internal knowledge copilots unify tribal knowledge scattered across Slack, Confluence, GitHub, product specs, and release notes. Developers can ask questions like “How did we solve this bug last year?” or “Where is the logic for billing retries?” and get grounded answers with citations.

Support teams benefit from ticket summarization that turns long customer conversations, logs, and attachments into structured insights. The Enterprise LLM can also draft first responses, suggest fixes, or flag high risk cases based on sentiment and customer tier.

Documentation teams use the model to generate and update product guides, changelogs, and release notes by analyzing commits, PR descriptions, and technical discussions. This ensures product documentation always keeps pace with development velocity.

SaaS orgs experience cleaner knowledge flows, faster onboarding, and significantly reduced support cycle times.

Future Outlook: Liquid LLM and Self Updating Intelligence

Enterprise LLMs today already deliver massive efficiency gains, but the next phase of evolution will remove the biggest bottleneck in AI adoption: the cost and friction of keeping models up to date. Traditional retraining cycles are expensive, slow, and infrequent. Enterprises often discover that their model’s understanding of their business lags behind reality by weeks or months.

The emerging direction, often referred to as Liquid LLM, changes that rhythm completely. Instead of treating learning as a quarterly event, Liquid LLM architectures introduce lightweight, continuous adaptation loops that run quietly in the background.

Systems of this kind can:

- Continuously update retrieval indexes as new documents, logs, and conversations enter the enterprise.

- Learn from daily feedback, incorporating approvals, edits, corrections, and customer interactions.

- Evolve prompt templates automatically, refining tone, structure, and safety rules based on what produces the best outcomes.

- Adapt fine tuning weights in micro-updates, without waiting for a full retraining cycle.
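The first of these loops, continuously updating the retrieval index, can be pictured with a toy inverted index that supports in-place upserts. This is only a sketch of the idea under simple assumptions (whole-word terms, in-memory storage). It is not a description of how Liquid LLM is implemented.

```python
import re


class IncrementalIndex:
    """Toy inverted index that can be updated one document at a time."""

    def __init__(self):
        self.postings = {}  # term -> set of doc ids containing it
        self.doc_terms = {}  # doc id -> set of terms currently indexed

    def upsert(self, doc_id, text):
        """Add or replace a document, touching only the terms that changed."""
        new_terms = set(re.findall(r"[a-z0-9]+", text.lower()))
        old_terms = self.doc_terms.get(doc_id, set())
        for term in old_terms - new_terms:
            self.postings[term].discard(doc_id)
            if not self.postings[term]:
                del self.postings[term]  # drop empty posting lists
        for term in new_terms - old_terms:
            self.postings.setdefault(term, set()).add(doc_id)
        self.doc_terms[doc_id] = new_terms

    def search(self, term):
        """Return the sorted ids of documents containing the term."""
        return sorted(self.postings.get(term.lower(), set()))
```

When a policy document changes, only the affected terms are rewritten, so stale facts stop being retrievable immediately and the rest of the index is left alone.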

The result is an Enterprise LLM that does not sit still.

It mirrors the pace of the business, becoming sharper every day.

This is the underlying idea behind Liquid LLM, Tericsoft’s vision for continually improving intelligence systems.

The future of enterprise AI is not static models.

It is self-updating intelligence that improves while your teams sleep.

Every Company Will Run Its Own AI Brain

"Every company is a software company."

— Satya Nadella, CEO of Microsoft

For the last decade, this meant that digital transformation was no longer optional.

In the era of Enterprise LLMs, this statement evolves into something much deeper.

Every serious organization, whether in finance, healthcare, retail, logistics, or SaaS, will soon operate its own AI brain, a private intelligence layer that understands its customers, its data, and its workflows better than any external system ever could.

Not a rented AI.

Not a public API that shares infrastructure with thousands of others.

But a sovereign Enterprise LLM:

- Hosted on private cloud or on prem

- Enforced with enterprise governance and risk controls

- Infused with internal knowledge, policies, and history

- Continuously improving through real-time feedback

- Capable of supporting teams across functions without leaking context

This shift is structural.

It separates enterprises that operate with real-time intelligence from those that still operate on static playbooks and historical dashboards.

Companies that build their AI brain now will compound improvement month after month.

Companies that delay will begin to feel slower without recognizing why their competitors seem to move with supernatural clarity and speed.

The enterprises that thrive will be the ones that invest early in their own Enterprise LLM, not someone else’s.

About Tericsoft: Your Partner for Enterprise LLMs

Tericsoft builds private, domain engineered Enterprise LLMs for FinTech, HealthTech, SaaS, and global enterprises. We use leading foundational models, frameworks like LangChain and LlamaIndex, and architecture patterns that combine retrieval, fine tuning, agentic reasoning, HITL, and continuous learning.

We support:

- Enterprise LLM strategy and design

- Contextual retrieval and RAG systems

- Fine tuning pipelines

- Agentic workflow design

- Governance and monitoring

- On prem and private cloud deployments

- Self updating intelligence architectures like Liquid LLM

Conclusion: AI At The Scale Of Electricity, Privacy At The Scale Of Enterprise

AI has reached the same order of magnitude as electricity during its early days.

It is not merely another technology trend.

It is a foundational shift in how work, decision-making, and knowledge will exist inside organizations.

Electricity reshaped every industry silently, consistently, and permanently.

AI is beginning to do the same but with one critical difference.

Enterprises cannot simply plug into a public grid. They must operate with sovereignty, compliance, and full control over their data.

True enterprise value emerges only when intelligence is:

- Private

- Governed

- Aligned to workflows

- Grounded in internal data

- Continuously improving

This is exactly what Enterprise LLMs provide.

They deliver the full power of generative AI while honoring the privacy, safety, and regulatory expectations of modern enterprises.

As leaders think about their future, the central question becomes simple.

Not “Should we adopt AI?”

But “How do we build our own AI brain?”

When you are ready to explore that journey, Tericsoft is here to help architect, deploy, and evolve your Enterprise LLM.