What is Generative AI, how does it evolve from simple prediction to the creation of original high-value content, and why is it now critical for enterprises seeking scalable, automated decision-making across operations?

Generative AI (GenAI) represents a foundational shift in software architecture: moving enterprises from Systems of Record to Systems of Creation. At its core, GenAI is a category of artificial intelligence that uses deep learning architectures to synthesize original data by modeling the underlying statistical distributions of massive datasets. While traditional AI is built for classification and prediction, GenAI acts as a probabilistic reasoning engine capable of generating high-fidelity text, code, and multimodal content.

For senior leadership, the transition is structural: it is the move from analyzing historical snapshots to deploying autonomous agents that can reason through ambiguity, iterate on complex logic, and execute end-to-end workflows in real time.

"AI is more profound than fire or electricity."

— Sundar Pichai, CEO of Google

What is Generative AI?

Generative AI is a subset of deep learning that uses generative models to produce data that closely resembles the statistical patterns of its training set. By leveraging architectures such as Transformers, diffusion models, and flow-based models, GenAI maps human intent (prompts) into high-dimensional latent spaces to produce coherent outputs.

Unlike discriminative models that learn the boundary between classes (e.g., distinguishing a fraudulent transaction from a legitimate one), generative models learn the actual distribution of the data. This allows them to sample from that distribution to create entirely new instances.
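The distinction can be illustrated with a deliberately small sketch. It assumes a single feature (transaction amount), a Gaussian fit, and invented sample values; the function names and the threshold are hypothetical and chosen only for illustration.

```python
import random
import statistics

# Hypothetical transaction amounts for one class (illustrative values only).
legit_amounts = [12.5, 40.0, 23.1, 35.7, 18.9, 27.3, 31.0, 22.4]

def fit_gaussian(samples):
    """Estimate the mean and standard deviation of a 1-D dataset."""
    return statistics.mean(samples), statistics.stdev(samples)

def sample_new(mean, std, n, seed=0):
    """Generative step: draw new values from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]

def is_above_threshold(amount, threshold=100.0):
    """Discriminative step: only decides which side of a boundary a value is on."""
    return amount > threshold

mean, std = fit_gaussian(legit_amounts)
synthetic = sample_new(mean, std, n=5)  # five new, previously unseen amounts
```

The discriminative function can only label an existing value, while the fitted distribution can be sampled to produce values that were never in the dataset. Real generative models learn far richer distributions, but the sampling idea is the same.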

From a technical perspective, the enterprise value lies in the transition from rule-following machines to probabilistic reasoning engines. This enables businesses to solve unstructured problems, such as drafting complex legal documents or generating production-ready code, at a scale and speed previously considered impossible. It represents the move from deterministic software to stochastic systems capable of handling high-entropy variables across the business value chain.

How Does Generative Al Work?

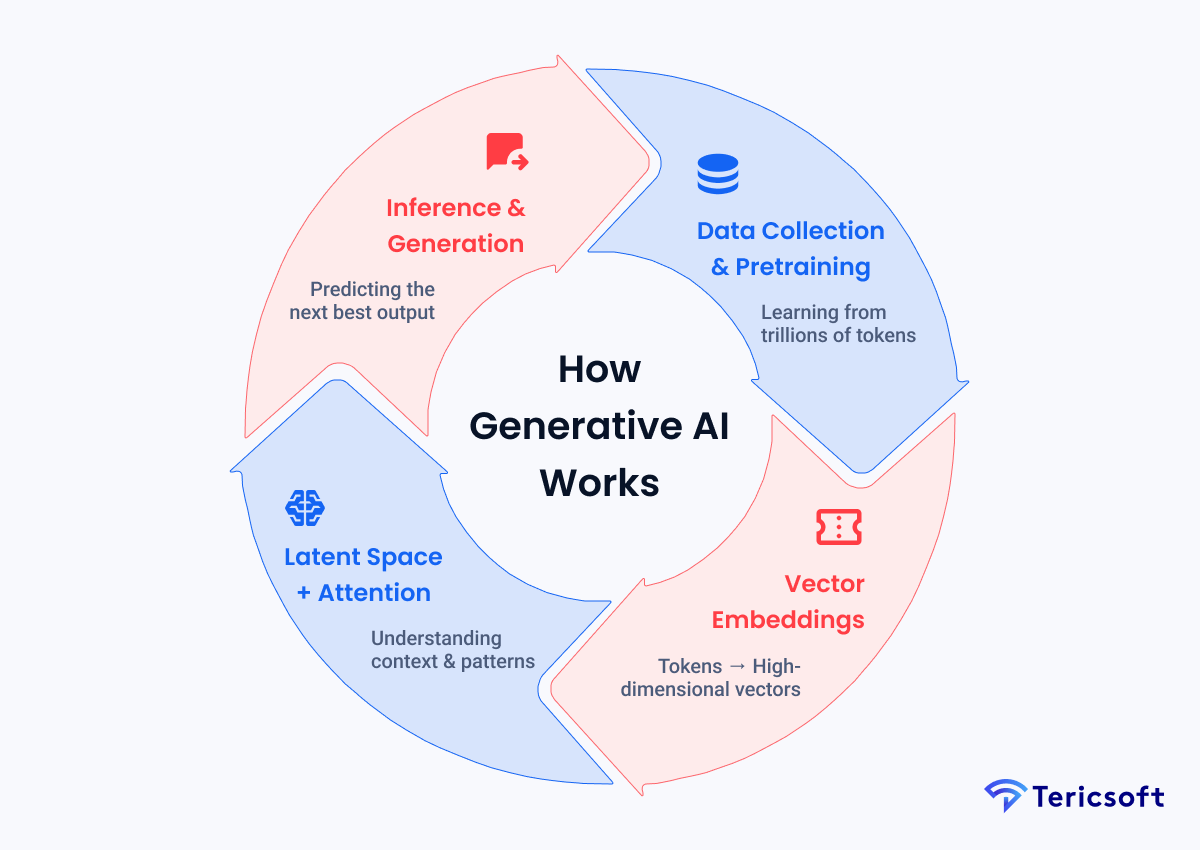

GenAl operates by mapping data into a high-dimensional mathematical space known as a latent space. During the training phase, the model learns the relationships and patterns between millions of data points (tokens). When a user provides a prompt, the model performs inference, navigating this latent space to predict the most probable sequence of data that satisfies the input criteria.
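As a rough illustration of "predicting the most probable sequence", the sketch below trains a bigram counter on a tiny invented corpus and decodes greedily. This is a stand-in technique chosen for brevity, not how a production LLM is trained; the corpus and function names are assumptions.

```python
from collections import Counter, defaultdict

# Tiny invented corpus; a real model is trained on vastly more data.
corpus = (
    "the model predicts the next token and "
    "the model samples the next token"
).split()

def train_bigram(tokens):
    """Training: count how often each token follows another."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def next_token_probs(counts, token):
    """Turn follower counts into a probability distribution."""
    total = sum(counts[token].values())
    return {tok: c / total for tok, c in counts[token].items()}

def generate(counts, start, max_tokens):
    """Inference: repeatedly append the most frequent follower (greedy decoding)."""
    out = [start]
    for _ in range(max_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this token
        out.append(followers.most_common(1)[0][0])
    return out

counts = train_bigram(corpus)
```

Calling `generate(counts, "next", 2)` extends the prompt token by token, each time picking the continuation seen most often during training.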

In Large Language Models (LLMs), this is accomplished through vector embeddings: numerical representations of semantic meaning. Every word or "token" is converted into a vector in a space with thousands of dimensions. The model does not understand content in the human sense; instead, it identifies the statistical likelihood of what should come next based on the mathematical proximity of tokens in its trained vector space.
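Proximity in a vector space is commonly measured with cosine similarity. The sketch below uses hand-written three-dimensional vectors as stand-ins for learned embeddings; the words and values are invented for illustration only.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings (real models use far more dimensions).
embeddings = {
    "invoice": [0.9, 0.1, 0.0],
    "receipt": [0.8, 0.2, 0.1],
    "giraffe": [0.0, 0.1, 0.9],
}

def nearest(word):
    """Return the other word whose vector points in the most similar direction."""
    return max(
        (w for w in embeddings if w != word),
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )
```

With these toy vectors, "invoice" sits closer to "receipt" than to "giraffe", which mirrors how semantically related tokens cluster in a trained embedding space.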

This process relies heavily on the "Attention Mechanism," which allows the model to weigh the importance of different tokens in a sequence relative to one another. This enables the system to maintain long-range context, ensuring that the generated content remains grounded in the structural logic of the initial prompt while feeling original.
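A minimal sketch of scaled dot-product attention, the core computation behind the mechanism described above, is shown here in plain Python. It assumes queries, keys, and values are the raw token vectors themselves; real Transformers first apply learned projection matrices and use multiple heads, both omitted here.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """For each query, weigh every value by how well its key matches the query."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors attending to each other (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token attends to every other token, regardless of how far apart they are in the sequence.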

Core Building Blocks of Generative AI

Building a production-ready GenAI system requires an understanding of four specific architectural layers:

- Neural Networks and Deep Learning: The compute layer where multi-layered architectures, typically based on the Transformer architecture, process non-linear data relationships through massive parallelization.

- Large-Scale Pretraining: The process of ingesting trillions of tokens (text, code, or pixels) to build a foundation model. This phase establishes the model's "world knowledge" and syntactic understanding.

- Fine-Tuning and Alignment: The engineering phase where a general model is adapted and optimized for specific enterprise domains. Parameter-efficient fine-tuning (PEFT) techniques such as LoRA (Low-Rank Adaptation) allow for domain specialization without the massive compute overhead of full retraining.

- Inference and Decoding: The runtime phase where the model processes inputs. Optimizing this layer is critical for reducing latency and token costs. Techniques such as quantization (reducing numerical precision) or model distillation (training a smaller "student" model from a larger "teacher") are essential for enterprise-grade deployments.
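To make "reducing numerical precision" concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of invented weights. It assumes a single per-tensor scale; production toolchains use more elaborate schemes (per-channel scales, calibration data), which are not shown.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical weights, for illustration only.
weights = [0.82, -1.27, 0.003, 0.5, -0.31]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Worst-case rounding error introduced by the lower precision.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error bounded by half the scale factor. That trade is what lowers memory use and inference cost.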

What Sets Generative AI Apart?

The defining characteristic of Generative AI is its move from pattern recognition to pattern synthesis. Traditional AI identifies "is this a cat?"; Generative AI "imagines a cat in a spacesuit." This shift enables zero-shot and few-shot learning, where a model can perform tasks it was never explicitly trained for by simply referencing its foundational knowledge.

For a CTO, the zero-shot capability is a game-changer. It means you no longer need to build and maintain a custom model for every single micro-task. One powerful foundation model, properly prompted or fine-tuned, can handle everything from technical documentation and sentiment analysis to customer support reasoning and code generation. It consolidates the technical stack from dozens of narrow models into a single, versatile reasoning engine.

"We are at the iPhone moment of AI."

— Jensen Huang, CEO of NVIDIA

Types of Generative AI Models

Success in AI engineering depends on choosing the right architecture for the specific technical bottleneck you are solving.

Variational Autoencoders (VAEs)

VAEs learn the latent representation of data by compressing it (encoding) and reconstructing it (decoding). They are primarily used for anomaly detection and creating high-fidelity synthetic data for testing. While they are compute-efficient, they often produce blurry results compared to modern architectures, making them best for backend data integrity tasks.

Generative Adversarial Networks (GANs)

GANs use a competitive framework where a Generator and a Discriminator train each other in a zero-sum game. While GANs produce incredibly realistic images, they are notoriously difficult to train due to "mode collapse," where the generator settles on a narrow range of outputs. They remain relevant for niche visual tasks and data augmentation in medical imaging but have been largely surpassed by newer architectures for general-purpose generation.

Transformer-Based Models

The current industry standard for text and reasoning. Transformers use a self-attention mechanism to weigh the importance of different parts of an input sequence regardless of their distance. This allows for deep context retention. Their primary trade-off is the quadratic scaling of compute costs as the context window increases, which requires sophisticated engineering to manage long-form data.
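The quadratic trade-off follows from simple arithmetic: standard self-attention compares every token with every other token, so the number of scores grows with the square of the context length. The helper names below are hypothetical and the count ignores optimizations such as sparse or windowed attention.

```python
def attention_score_count(context_length, num_heads=1):
    """Pairwise scores computed per layer: one per (query, key) pair per head."""
    return num_heads * context_length ** 2

def relative_cost(new_length, base_length):
    """How many times more scores a longer context needs than a shorter one."""
    return attention_score_count(new_length) / attention_score_count(base_length)

# Doubling the context window quadruples the attention work.
growth = {n: attention_score_count(n) for n in (1_000, 2_000, 4_000)}
```

Going from a 4,000-token to an 8,000-token window therefore costs roughly four times as much attention compute, not twice as much.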

Diffusion Models

Diffusion models have replaced GANs for most high-quality image and video generation. They work by iteratively removing Gaussian noise from a signal until a clear image emerges. They offer superior training stability and creative control, though they typically require more inference steps, which can lead to higher latency in real-time applications.
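The iterative denoising loop can be sketched on a four-number "signal". This toy assumes a linear noise schedule and a deterministic (DDIM-style) reverse step, and it replaces the trained noise-prediction network with an oracle that already knows the noise; that substitution is purely for illustration, since a real model has to learn this prediction.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction per step; index 0 means no noise added."""
    alpha_bars = [1.0]
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars

def add_noise(x0, noise, alpha_bar):
    """Forward process: blend the clean signal with Gaussian noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]

def denoise_step(x_t, predicted_noise, ab_t, ab_prev):
    """One deterministic reverse step from noise level t to level t-1."""
    x0_pred = [
        (x - math.sqrt(1.0 - ab_t) * n) / math.sqrt(ab_t)
        for x, n in zip(x_t, predicted_noise)
    ]
    return add_noise(x0_pred, predicted_noise, ab_prev)

rng = random.Random(0)
signal = [1.0, -0.5, 0.25, 0.0]               # hypothetical clean data
noise = [rng.gauss(0.0, 1.0) for _ in signal]
num_steps = 200
alpha_bars = alpha_bar_schedule(num_steps)

x = add_noise(signal, noise, alpha_bars[-1])  # heavily noised starting point
for t in range(num_steps, 0, -1):
    # Oracle stand-in for a trained network's noise prediction.
    x = denoise_step(x, noise, alpha_bars[t], alpha_bars[t - 1])
```

The loop runs once per noise level, which is why diffusion inference needs many sequential steps and tends to have higher latency than single-pass generators.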

The Market Insight: Search interest for diffusion models has significantly outpaced VAEs and GANs. This reflects a global enterprise pivot toward multimodal generation. With research momentum peaking in Hangzhou and Beijing, the focus is shifting toward Video-as-a-Service and high-fidelity creative automation where the ROI is driven by the total elimination of manual asset production.

How Does Generative AI Differ from Other Types of AI?

To define a strategic AI roadmap, it is essential to distinguish between the various paradigms of artificial intelligence. While traditional machine learning (ML) has focused on pattern identification and rigid decision-making, Generative AI introduces an iterative layer that changes the fundamental relationship between the user and the software.

Generative AI vs Predictive AI

Generative AI vs Traditional Chatbots

Benefits of Generative AI

The integration of GenAI provides a multi-layered advantage that extends beyond simple automation:

- Algorithmic Content Scalability: Decoupling high-value output from human labor hours. Enterprises can generate vast quantities of personalized marketing assets, technical documentation, and code stubs instantaneously.

- Knowledge Institutionalization: Using RAG (Retrieval-Augmented Generation) to turn static internal documentation into an interactive, reasoning knowledge base that can answer complex internal queries.

- Hyper-Personalization at Scale: Delivering unique customer experiences that adapt to specific user behaviors in real time. According to BCG, companies using GenAI for marketing report a 30% improvement in conversion rates through high-velocity content testing.

- Synthetic Data Generation: Creating high-fidelity synthetic datasets to train other ML models in environments where real-world data is scarce, expensive to acquire, or legally sensitive.

- Engineering Force Multiplication: Accelerating software development cycles. Research from GitHub shows that developers using AI assistants complete tasks 55% faster than those who do not.

- Operational Efficiency in Services: In customer service operations, GenAI implementation has led to a 14% increase in issue resolution per hour, as reported by NBER, specifically helping newer workers bridge the performance gap with experienced counterparts.

Challenges of Generative AI

Engineering a production-ready system requires managing specific technical trade-offs that go beyond the hype:

- Hallucinations vs Grounding: Models can confidently generate false data. Mitigating this typically involves RAG architectures that ground the model in verified, private data retrieved from vector databases.

- Latency vs Quality: Larger models provide better reasoning but slower response times. CTOs must often choose between a "smart" slow model for complex logic and a "fast" lean model for real-time UI/UX.

- Data Privacy: Protecting proprietary data requires building secure pipelines (often VPC-based or on-premise deployments) where enterprise data is never leaked into public training sets.

- Token Economics: Managing the context window is a financial necessity. Optimizing prompt engineering with caching and prompt compression is the new standard for cost control in scaled systems.
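The grounding step from the first challenge above can be sketched as retrieval followed by prompt assembly. This example assumes a toy word-count "embedding" and an in-memory dictionary instead of a learned embedding model and a vector database; the documents and function names are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents standing in for a private knowledge base.
documents = {
    "refund-policy": "Refunds are issued within 14 days of purchase with a valid receipt.",
    "vpn-setup": "Install the VPN client and sign in with your corporate account.",
    "expense-rules": "Expenses above 500 dollars require manager approval before purchase.",
}

def embed(text):
    """Toy embedding: word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, k=1):
    """Return the ids of the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(
        documents, key=lambda d: cosine(q, embed(documents[d])), reverse=True
    )
    return ranked[:k]

def build_prompt(query, k=1):
    """Assemble a prompt that restricts the model to the retrieved context."""
    context = "\n".join(documents[d] for d in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Keeping `k` small also serves the token-economics point: only the most relevant passages are sent to the model, so the context window and the bill stay bounded.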

Applications of Generative AI in Business

Generative AI in SaaS & Software Development

AI is shifting the developer's role from writing syntax to reviewing logic. Copilots handle boilerplate code and unit testing, while AI-driven testing suites generate edge-case scenarios that human testers might overlook, significantly increasing code coverage.

Generative AI in Marketing & Sales

GenAI allows for mass-personalization at a granular level. An enterprise can deploy 10,000 ad versions, each tailored to the specific psychological profile and past behavior of the individual lead, drastically improving conversion rates.

Generative AI in Healthcare

Beyond clinical summaries, GenAI is being used to predict protein structures and simulate molecular interactions. Research indicates GenAI could unlock $60 billion to $110 billion in annual value for the pharmaceutical industry by accelerating drug discovery cycles.

Generative AI in FinTech

In finance, GenAI generates synthetic market crashes to stress test portfolios under conditions that have never occurred. It also creates personalized wealth management reports that synthesize real-time market fluctuations with a client's specific risk tolerance.

Generative AI in E-commerce

AI creates high-quality product visuals and virtual try-on experiences, reducing photography costs while increasing conversion rates through personalized visual recommendations that adapt to user preferences.

Real Business Impact of Generative AI

ROI is found in the compression of the value chain. Startups are launching full platforms with 1/10th of the traditional headcount, while enterprises are seeing 40% productivity gains in content and coding departments. The impact is a total realignment of the digital workforce, moving from "Execution" to "Orchestration."

"Generative AI will reshape how every team operates"

— Clara Shih, CEO of Salesforce AI

Why Choose Tericsoft: How We Help You Win with Generative AI

Tericsoft is an execution-first technology partner. We do not just provide high-level strategy; we provide the engineering infrastructure to move from a prompt to a production platform. Our approach is built on solving the core technical trade-offs of AI: cost, latency, and accuracy.

Choosing Tericsoft means choosing a partner with a Product-First mindset. We provide the engineering muscle to build and scale secure, enterprise-ready architectures that protect your data while maximizing the potential of the latest models.

AI Services

We offer comprehensive implementation through our AI Services, ranging from custom model development to RAG-based internal tools. We ensure your AI strategy is aligned with your business objectives and technical infrastructure, focusing on creating a "Data Moat" for your organization.

CTO as a Service for AI Strategy

Navigating the AI hype cycle requires technical leadership. Through our CTO as a Service, we provide the strategy for data governance, model selection (Open Source vs. Proprietary), and infrastructure roadmapping to ensure your AI investment delivers long-term value.

Technology Partner for Scale

For established enterprises, we manage the complex integration of GenAI into existing legacy stacks. As your Technology Partner, we build the secure data pipelines, vector databases (Pinecone, Weaviate, Milvus), and inference layers needed for enterprise-grade tools.

When Should a Business Adopt Generative AI?

The moment for adoption is when your business encounters high-volume, unstructured data bottlenecks. If your team is spending thousands of hours on repetitive creation, AI adoption is one of the few ways to scale without a linear increase in headcount. Waiting for the technology to "mature" is no longer a viable strategy, as the gap between adopters and laggards keeps widening.

Best Practices for Enterprise Generative AI Adoption

Start by identifying high-impact, low-risk use cases: typically internal productivity tools. Build a robust "Human-in-the-Loop" workflow to verify AI outputs before they reach the customer. Most importantly, focus on your data moat. The most powerful AI is the one that is grounded in your company's unique, private data. Iterate fast with MVPs and partner with experts who have navigated the integration hurdles of large-scale deployments.
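One way to picture a "Human-in-the-Loop" workflow is a review queue where nothing is released until a person approves it. The sketch below is an assumption about how such a gate could look; the class names, statuses, and sample drafts are hypothetical, not a prescribed design.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Draft:
    """An AI-generated output waiting for human verification."""
    text: str
    status: str = "pending"           # pending -> approved | rejected
    reviewer: Optional[str] = None

@dataclass
class ReviewQueue:
    drafts: List[Draft] = field(default_factory=list)

    def submit(self, text):
        """Every generated output enters the queue as pending."""
        draft = Draft(text)
        self.drafts.append(draft)
        return draft

    def review(self, draft, reviewer, approve):
        """Record a human decision and who made it."""
        draft.status = "approved" if approve else "rejected"
        draft.reviewer = reviewer

    def releasable(self):
        """Only human-approved drafts may reach the customer."""
        return [d.text for d in self.drafts if d.status == "approved"]

queue = ReviewQueue()
reply = queue.submit("Your refund was processed today.")
risky = queue.submit("We guarantee delivery by tomorrow.")
queue.review(reply, reviewer="alice", approve=True)
queue.review(risky, reviewer="bob", approve=False)
```

Recording the reviewer alongside each decision also gives an audit trail, which tends to matter once such a workflow moves from internal tools to customer-facing use.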

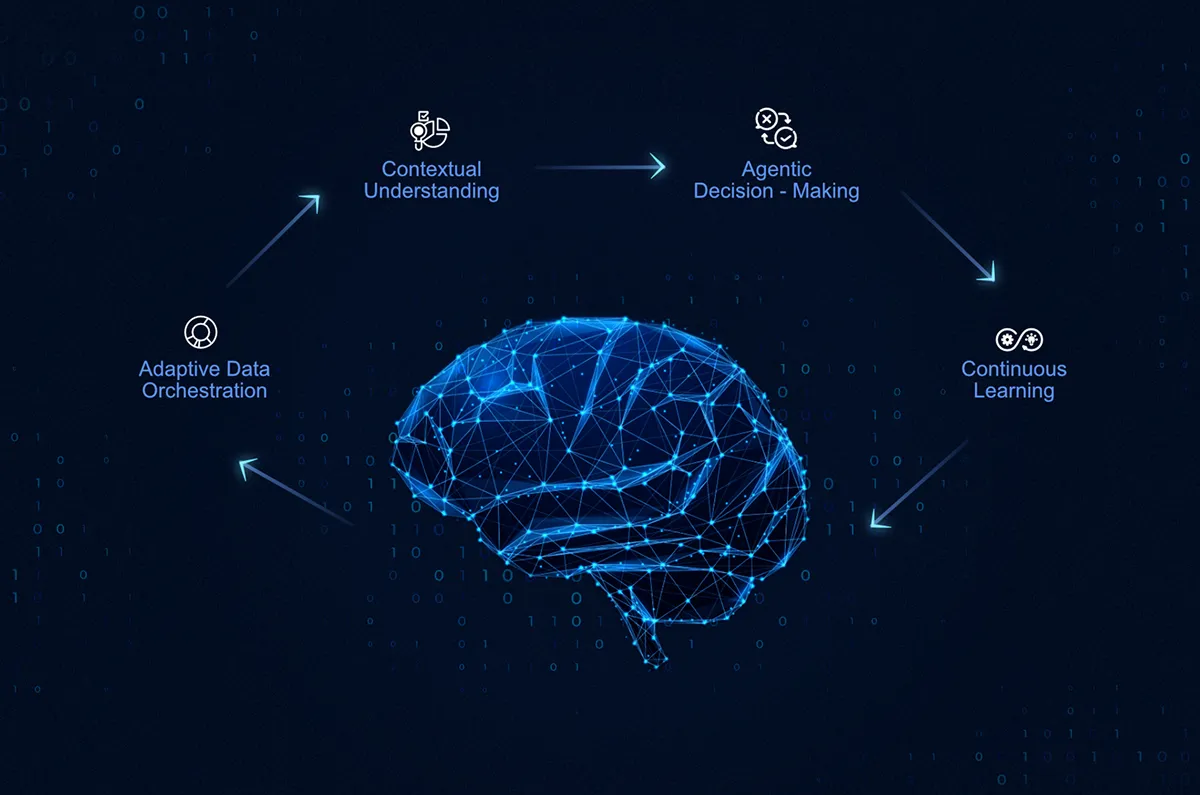

The Future of Generative AI

The future is Agentic AI. We are moving from models that "talk" to models that "do." Soon, AI agents will not just draft a plan; they will access your tools, coordinate with other agents (multi-agent systems), and execute the entire project autonomously. Every enterprise will eventually run on a personalized, digital brain that manages the business lifecycle from ideation to scale.