January 31, 2026

5 minutes read

Top 10 AI Use Cases in Fintech for B2B Enterprises in 2026

Top 10 Fintech AI Use Cases • 1. Fraud Detection • 2. Credit Scoring • 3. KYC Automation • 4. AML Monitoring • 5. AI Payments • 6. Personalized Finance.

Anand Reddy KS

CTO & Co-founder at Tericsoft

January 31, 2026

12 minutes read

PCI + GenAI: Build a Zero-Card-Data System for Payments

A Zero-Card-Data system for payments is a strategy that keeps the PAN (primary account number) out of GenAI prompts, logs, and pipelines so the AI stays card-blind.

Anand Reddy KS

CTO & Co-founder at Tericsoft

January 26, 2026

13 minutes read

Top 10 LLM + RAG Architectures for FinTech Operations

Discover the top 10 LLM + RAG architectures for FinTech operations that eliminate hallucinations, ensure regulatory compliance, and power audit-ready AI.

Mohamed Hamza Tumbi

Digital Marketer at Tericsoft

January 17, 2026

12 minutes read

What Is SAP and How Do Businesses Use SAP as an ERP Platform?

SAP ERP is an enterprise software platform that integrates finance, operations, supply chain, and HR into a single real-time system of record.

Abdul Rahman Janoo

Co-founder & CEO at Tericsoft

January 12, 2026

12 minutes read

Data Privacy in LLM: How Enterprises Secure AI Data at Scale

Data privacy in LLMs describes the architectural controls enterprises use to protect data during AI reasoning, retrieval, and autonomous decision-making.

Anand Reddy KS

CTO & Co-founder at Tericsoft

January 6, 2026

9 minutes read

Top 10 AI Services Companies in 2026 for Enterprise AI Adoption

Top 10 AI Services Companies • 1. Tericsoft • 2. Accenture • 3. IBM Consulting • 4. Deloitte • 5. Cognizant • 6. Tata Consultancy Services.

Mohamed Hamza Tumbi

Digital Marketer at Tericsoft

December 28, 2025

9 minutes read

AI Agents: The Operating Layer of the Modern Enterprise

AI agents are autonomous, goal-driven systems that reason, plan, and act across enterprise workflows to execute tasks using data and tools.

Anand Reddy KS

CTO & Co-founder at Tericsoft

December 28, 2025

10 minutes read

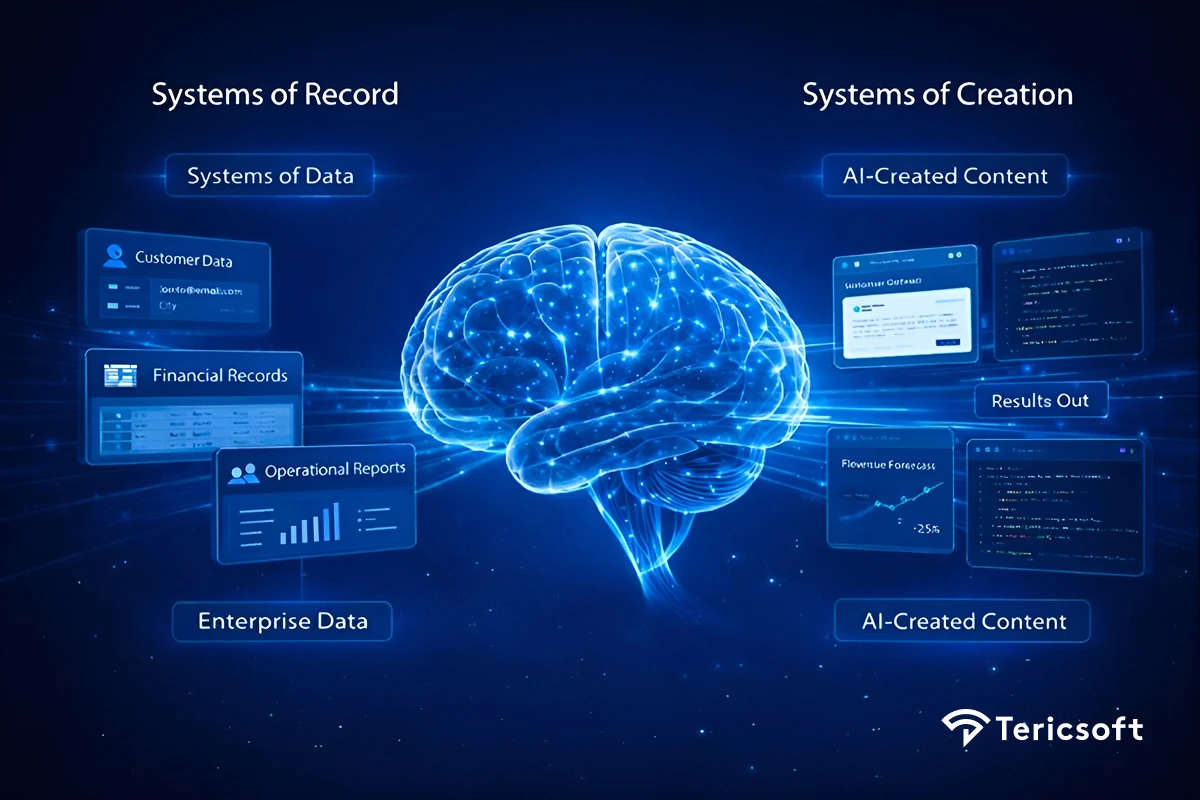

Generative AI: From Systems of Record to Systems of Creation

Generative AI is an advanced form of artificial intelligence that uses deep learning to generate original text, code, images, and multimodal content.

Anand Reddy KS

CTO & Co-founder at Tericsoft